Artificial intelligence has advanced quickly in creative applications. One notable example is SEED-Story, a multimodal long story generation system developed by Tencent ARC. The project uses a multimodal large language model (MLLM) to generate long narratives with accompanying images whose characters and visual style stay consistent from one scene to the next.

The Magic Behind SEED-Story

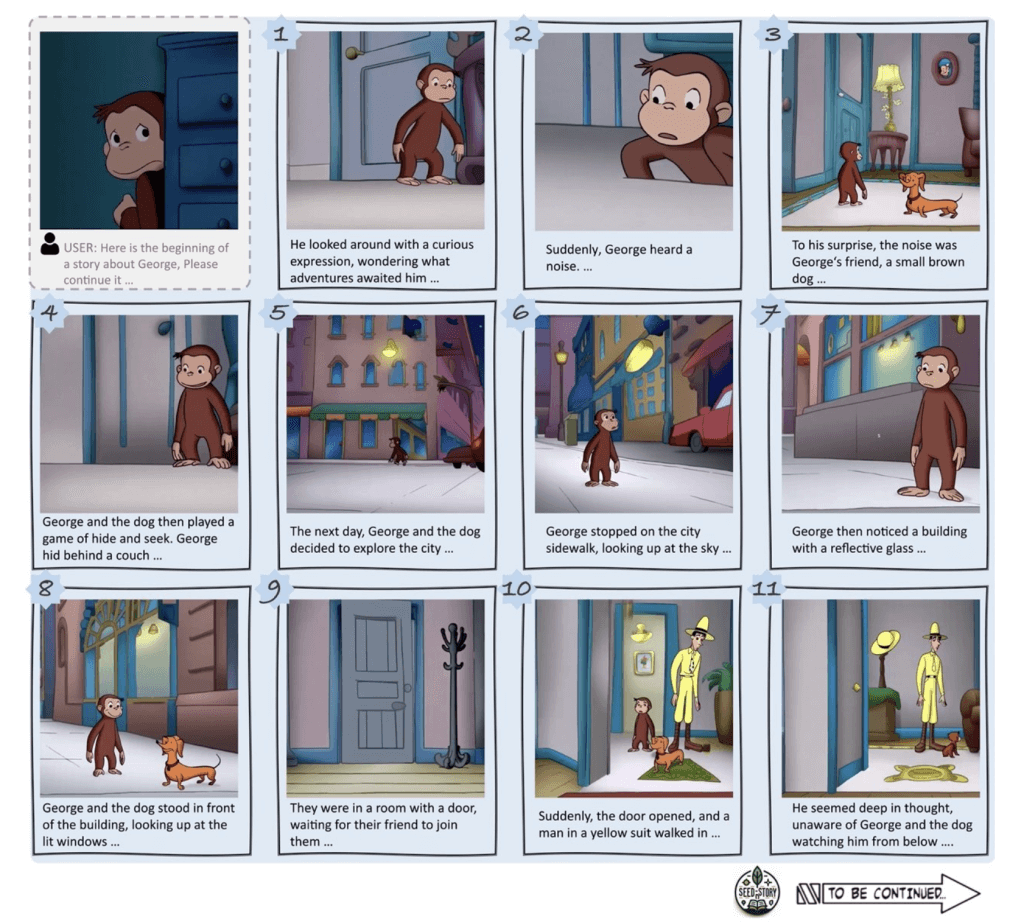

At the heart of SEED-Story is a multimodal large language model (MLLM) known as SEED-X. The system takes user-provided prompts, both text and images, and turns them into stories that span up to 25 multimodal sequences. What sets SEED-Story apart is its ability to maintain character and stylistic consistency throughout the narrative, thanks to a three-stage training and generation process.

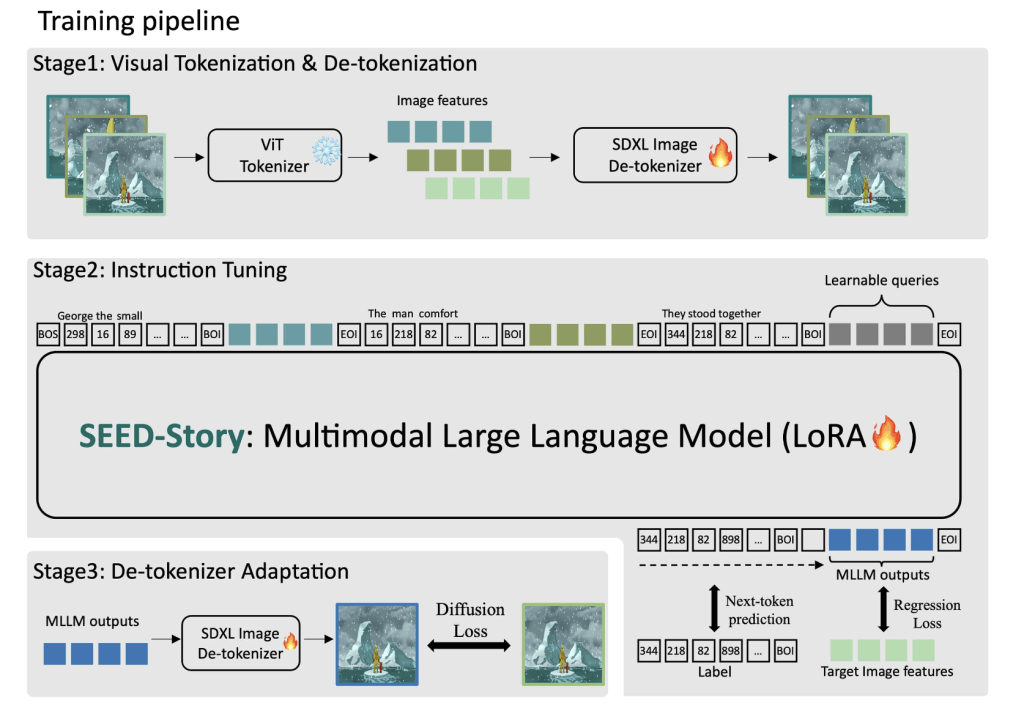

Stage 1: Visual Tokenization & De-tokenization

The first stage pre-trains an SD-XL-based de-tokenizer to reconstruct images from the features of a pre-trained Vision Transformer (ViT). This gives the system a way to turn ViT features back into pixels, which the later stages rely on when producing the story's images.

Stage 2: Multimodal Sequence Training

In the second stage, the system samples interleaved image-text sequences of random lengths. The MLLM is then trained on two objectives: next-word prediction for the text and image feature regression for the images. The regression objective aligns the output hidden states of learnable queries with the ViT features of the target images, so a single model learns to produce both the story text and the features of the matching images.
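To make the two Stage 2 objectives concrete, here is a minimal, framework-free sketch. It is not the project's training code: the function names, the use of mean squared error for the regression term, the contiguous-window sampling, and the `image_weight` factor are all assumptions made for illustration.

```python
import math
import random


def cross_entropy(logits, target_index):
    """Next-word prediction loss for one position: -log softmax(logits)[target]."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target_index]


def feature_mse(query_states, vit_features):
    """Regression loss between the hidden states of the learnable queries and
    the ViT features of the target image (MSE is an assumption here)."""
    if len(query_states) != len(vit_features):
        raise ValueError("expected one ViT feature vector per learnable query")
    total, count = 0.0, 0
    for state, feature in zip(query_states, vit_features):
        if len(state) != len(feature):
            raise ValueError("feature dimension mismatch")
        for s, f in zip(state, feature):
            total += (s - f) ** 2
            count += 1
    return total / count


def sample_window(sequence, rng):
    """Pick a contiguous sub-sequence of random length from an interleaved story."""
    length = rng.randint(1, len(sequence))
    start = rng.randint(0, len(sequence) - length)
    return sequence[start:start + length]


def _mean(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0


def stage2_loss(text_steps, image_steps, image_weight=1.0):
    """Combine both objectives.

    text_steps:  list of (logits, target_index) pairs, one per text token.
    image_steps: list of (query_states, vit_features) pairs, one per image.
    image_weight is a hypothetical balancing factor, not a documented setting.
    """
    text_loss = _mean(cross_entropy(l, t) for l, t in text_steps)
    image_loss = _mean(feature_mse(q, v) for q, v in image_steps)
    return text_loss + image_weight * image_loss
```

In a real setup the logits and hidden states would come from the MLLM and the targets from the ViT; here they are plain Python lists so the arithmetic is easy to follow.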

Stage 3: De-tokenizer Adaptation

Finally, the regressed image features from the MLLM are fed into the de-tokenizer for fine-tuning, further enhancing the consistency of the generated images. This adaptation ensures that the characters and styles depicted in the story remain consistent, providing a more immersive experience for the reader.

StoryStream: The Dataset Fueling Innovation

A crucial component of the SEED-Story project is StoryStream, a large-scale dataset specifically designed for training and benchmarking multimodal story generation. StoryStream includes three subsets: Curious George, Rabbids Invasion, and The Land Before Time. Each subset contains extensive data, including images and corresponding story texts.

Here’s an example of how the data is structured in StoryStream:

    {
      "id": 102,
      "images": [
        "000258/000258_keyframe_0-19-49-688.jpg",
        "000258/000258_keyframe_0-19-52-608.jpg",
        ...
      ],
      "captions": [
        "Once upon a time, in a town filled with colorful buildings, a young boy named Timmy was standing on a sidewalk...",
        ...
      ],
      "orders": [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
    }

Bringing Stories to Life: A Technical Journey

To generate a story with SEED-Story, users start by providing an initial image and text prompt. The system then embarks on a creative journey, crafting a narrative that evolves from the given input. Depending on the starting text, SEED-Story can produce different storylines from the same initial image, showcasing its versatility.
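As a rough illustration of that starting input, the sketch below reads a record that uses the StoryStream field names (`id`, `images`, `captions`, `orders`), pairs each image with its caption, and treats the first pair as the opening prompt. The toy record, the helper names `load_frames` and `split_prompt`, and the idea of seeding from the first frame are assumptions for illustration; the article does not explain what `orders` means, so the sketch leaves that field unused.

```python
import json

# Toy record with the StoryStream field names. The file names and captions
# are placeholders, not real dataset entries.
TOY_RECORD = json.dumps({
    "id": 0,
    "images": ["frame_0.jpg", "frame_1.jpg", "frame_2.jpg"],
    "captions": [
        "Caption for frame 0.",
        "Caption for frame 1.",
        "Caption for frame 2.",
    ],
    "orders": [0, 1, 2],
})


def load_frames(record_json):
    """Parse one record and pair each image path with its caption."""
    record = json.loads(record_json)
    images, captions = record["images"], record["captions"]
    if len(images) != len(captions):
        raise ValueError("record has a different number of images and captions")
    return list(zip(images, captions))


def split_prompt(frames):
    """Use the first (image, caption) pair as the prompt; the rest is the story."""
    if not frames:
        raise ValueError("record contains no frames")
    return frames[0], frames[1:]


frames = load_frames(TOY_RECORD)
prompt, continuation = split_prompt(frames)
print("prompt image:", prompt[0])
print("prompt text:", prompt[1])
print("frames left to generate:", len(continuation))
```

Swapping the prompt text while keeping the same prompt image corresponds to the case described above, where one starting image leads to different storylines.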

The generated stories consist of rich text and images that keep characters and style consistent. This follows from the three-stage pipeline: the MLLM writes the text and regresses the image features, and the adapted de-tokenizer turns those features into images.

Here’s a glimpse into the inference process:

    # Multimodal story generation
    python3 src/inference/gen_george.py

    # Story visualization with multimodal attention sink
    python3 src/inference/vis_george_sink.py

The evaluation of the generated stories involves assessing image style consistency, narrative engagement, and text-image coherence. This rigorous evaluation ensures that the stories are not only engaging but also visually and narratively consistent.

A Future of Endless Possibilities

SEED-Story is more than just a technological marvel; it represents a new era in storytelling. By seamlessly blending AI-driven text generation with consistent visual content, it offers a powerful tool for creators, educators, and anyone passionate about narrative art. The potential applications are vast, from personalized children’s books to dynamic content creation for entertainment and education.

As AI continues to evolve, projects like SEED-Story are likely to pave the way for more immersive storytelling experiences. Whether you’re a researcher, a developer, or simply a storytelling enthusiast, SEED-Story shows how imagination and technology can come together to produce narratives that are both engaging and visually consistent.

For more information and to explore the SEED-Story project further, visit the SEED-Story GitHub page.